Dr. José María Simón Castellví

In the article «An Unresolved Point in Artificial Intelligence,» published in FIAMC media outlets, José María Simón Castellví addresses the fundamental epistemological challenges posed by tools based on generative artificial intelligence, particularly in sensitive domains such as medicine and religion. Simón Castellví is a member of the Sociedad Española de Oftalmología, Corresponding Member by award of the Real Academia de Medicina de Cataluña, Honorary President of the Federación Mundial de Sociedades Médicas Católicas, and a Numerary Member of both the Instituto Médico Farmacéutico de Cataluña and the Royal European Academy of Doctors (READ).

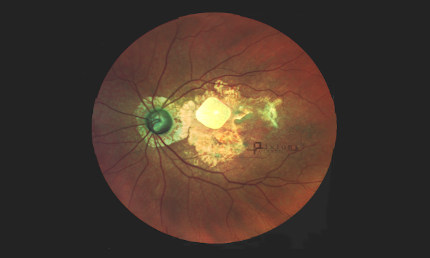

According to the academic, one of the central problems of this disruptive technology is its inherent inability to distinguish verifiable facts from opinions or beliefs, since such systems are trained on large datasets that mix empirical information with subjective interpretations. In the medical field, the author explains, this limitation may lead to biases or errors if a model processes objective clinical data, such as test results, indiscriminately alongside professionals' subjective notes. Diagnoses or treatment recommendations could then be affected when a clinical inference is mistaken for a biological fact.

Similarly, in the religious domain, where artificial intelligence must contend with notions that do not conform to scientific criteria, such as revelation or doctrines of faith, these systems cannot differentiate between texts considered canonical and secondary theological analyses. As a result, such tools may generate responses that merge doctrine with speculative interpretation without respecting the conceptual hierarchies intrinsic to these fields. This happens because artificial intelligence operates as a system of statistical pattern correlation rather than as an intelligence capable of understanding complex contexts or the hierarchical value of different kinds of knowledge.

For Simón Castellví, only human intelligence currently integrates conscious understanding, emotional awareness, and normative judgment, capacities that present-day generative artificial intelligence cannot develop. He argues that, despite evident and ongoing technical advances, reliance on these tools, especially in domains involving ethical, medical, or religious judgments, must be accompanied by human oversight. "In conclusion, we can affirm that trust in AI in the domains discussed requires continuous human supervision to provide context, wisdom, and moral and empirical discernment in order to distinguish what is irrefutably true or highly probable from what is merely a perspective or a belief. This is the limit of algorithmic reason when confronted with the complexity of the fascinating human experience," the academic concludes.